LiteAPI

Overview

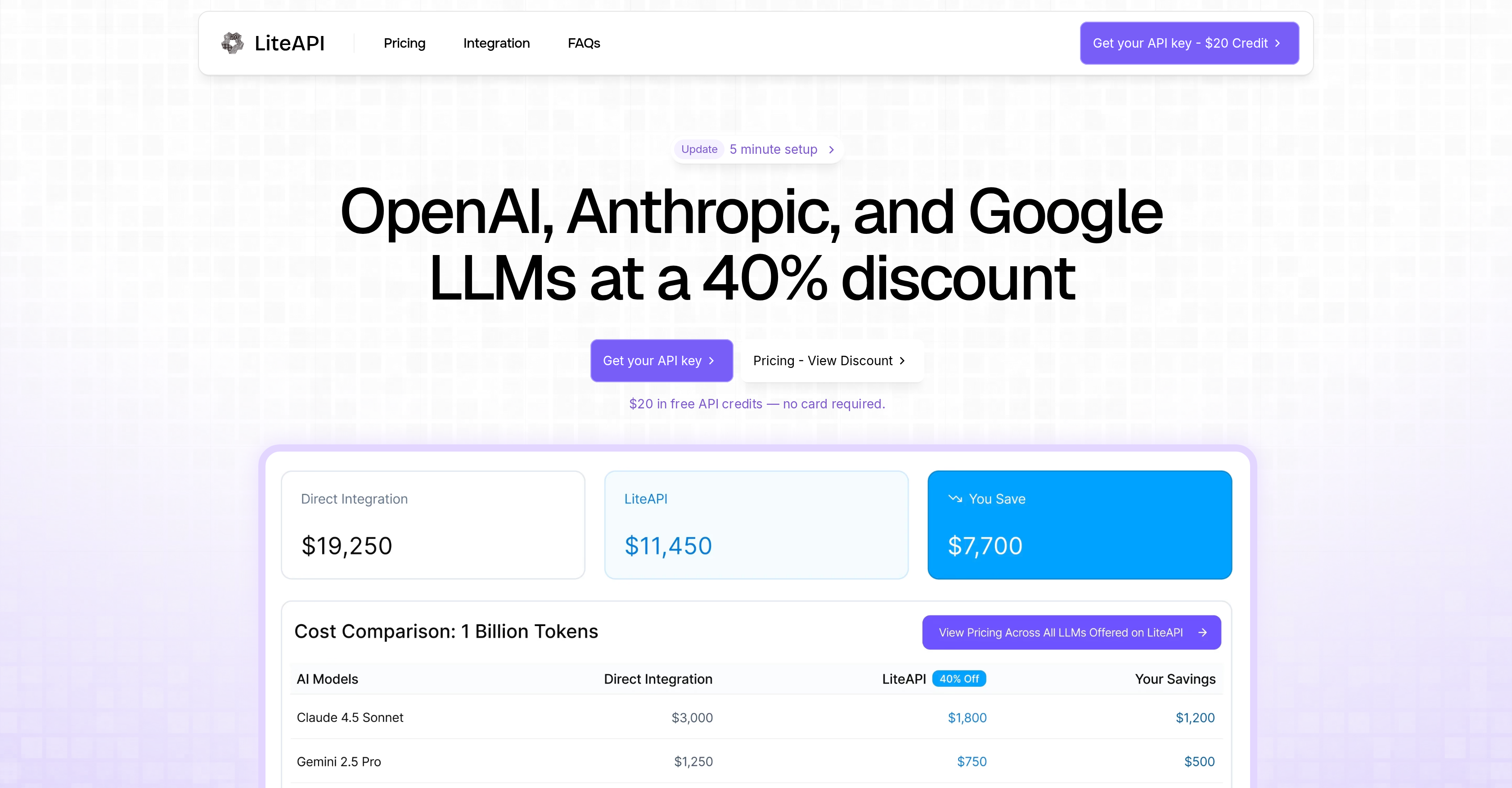

- Cut LLM API costs by up to 40% through preferred contracts secured with major providers

- Access multiple LLM providers through one unified API endpoint, eliminating integration complexity

- Monitor spending and performance with detailed analytics tracking requests, tokens, and costs across all providers

- Deploy with enterprise-grade reliability using automatic failover and edge deployment for maximum uptime

- Protect sensitive data with TLS 1.3 encryption and AES-256 key storage, with no prompt logging

- Scale team collaboration efficiently using shared API credits for collective testing and development

- Migrate seamlessly from existing OpenAI implementations with full API format compatibility

- Maintain production readiness with support for text, vision, embeddings, and function calling capabilities

Pros & Cons

Pros

- Unified API interface

- Supports several LLM providers

- Enterprise-grade performance

- Automatic failover for uptime

- Shared API credits

- Multi-provider support

- Usage analytics

- Seamless integration across languages/frameworks

- High-security protocols

- Traffic encryption

- Secure key storage

- User data privacy

- Broad model capabilities

- Preferred contracts with providers

- Potential cost savings

- Supports text analysis

- Supports vision capabilities

- Embeddings support

- Function calling support

- High scalability

- Edge deployment strategy

- Doesn't use user data for model improvement

- Up to 40% discount on LLM usage

- $20 API credit on sign-up

Cons

- Discounts are variable

- Possible price changes

- Extra latency (typically under 15 ms)

- Setup may be complex

- Non-uniform LLM capabilities

- No data backup mentioned

- No training with user data

- Security dependent on providers

- May require large spend for discounts

❓ Frequently Asked Questions

LiteAPI is an artificial intelligence tool that provides a unified API interface to large language model (LLM) providers such as OpenAI, Anthropic, and Google. Its features target both AI development and production deployment. Because it accepts the OpenAI API format, existing code in any language or framework can use it by changing the API endpoint. LiteAPI also passes on cost savings from the preferred contracts it secures with providers.

LiteAPI supports several LLM providers, namely OpenAI, Anthropic, and Google.

The major features of LiteAPI include enterprise-grade performance; automatic failover for uptime; shared API credits across team members; compatibility with the OpenAI API format for easy integration and migration; multi-provider support, which connects to several LLM providers through a single gateway; and usage analytics that track requests, tokens used, response times, and costs across all providers.

LiteAPI delivers enterprise-grade performance by being built for scale: it is designed to handle a high volume of requests from many concurrent users without sacrificing speed or efficiency.

LiteAPI's automatic failover is designed to keep your AI workloads running. If a primary system fails, traffic is switched automatically to a backup system so that service continues without interruption.
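LiteAPI performs failover on its own side, and how it picks a backup is not documented here. As a generic illustration of the idea only, the Python sketch below tries a list of upstreams in order and returns the first successful result. The `call_with_failover` helper and the `primary`/`backup` callables are hypothetical stand-ins, not LiteAPI's implementation.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical upstream: a (name, callable) pair. The callable takes a prompt and
# returns a completion string, or raises an exception when the upstream fails.
Upstream = Tuple[str, Callable[[str], str]]


def call_with_failover(upstreams: Sequence[Upstream], prompt: str) -> Tuple[str, str]:
    """Try each upstream in order; return (name, completion) from the first success."""
    errors = []
    for name, call in upstreams:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real gateway would separate retryable from fatal errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all upstreams failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    # Simulates an outage of the primary upstream.
    raise ConnectionError("primary unavailable")


def backup(prompt: str) -> str:
    # Simulates a healthy backup upstream.
    return f"echo: {prompt}"


provider, reply = call_with_failover([("primary", primary), ("backup", backup)], "hello")
print(provider, reply)
```

The order of the list encodes the preference; a production gateway would typically also add timeouts and health checks so that a failing upstream is skipped quickly.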

LiteAPI is designed for team use. It provides shared use of API credits across team members, empowering teams to experiment, test, and build collectively.

LiteAPI's compatibility with the OpenAI API format means you can transition to LiteAPI from OpenAI seamlessly, without having to overhaul your existing setup. This compatibility makes migration and integration with other systems hassle-free.

LiteAPI's multi-provider support connects users to various LLM providers through a single gateway. This removes the need for multiple interfaces and makes it convenient to switch between different large language models.

LiteAPI offers extensive usage analytics. You can track requests, tokens used, response times, and costs across all providers. This helps in monitoring and allocating resources efficiently.
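The per-provider rollup described above can be sketched in a few lines of Python. The `RequestRecord` fields and the per-1,000-token prices are hypothetical placeholders chosen for illustration; they are not LiteAPI's log schema or rates.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestRecord:
    provider: str
    tokens: int
    latency_ms: float


# Hypothetical USD prices per 1,000 tokens, for illustration only.
PRICE_PER_1K = {"openai": 0.002, "anthropic": 0.003}


def summarize(records: List[RequestRecord]) -> Dict[str, dict]:
    """Aggregate request count, tokens, mean latency, and cost per provider."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "latency_ms": 0.0})
    for r in records:
        t = totals[r.provider]
        t["requests"] += 1
        t["tokens"] += r.tokens
        t["latency_ms"] += r.latency_ms

    summary = {}
    for provider, t in totals.items():
        summary[provider] = {
            "requests": t["requests"],
            "tokens": t["tokens"],
            "avg_latency_ms": t["latency_ms"] / t["requests"],
            "cost_usd": t["tokens"] / 1000 * PRICE_PER_1K[provider],
        }
    return summary


records = [
    RequestRecord("openai", 1200, 400.0),
    RequestRecord("openai", 800, 600.0),
    RequestRecord("anthropic", 500, 700.0),
]
print(summarize(records))
```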

Yes, LiteAPI works with any language or framework. You can keep your existing code intact and only change the API endpoint.
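Because the gateway accepts the OpenAI API format, switching amounts to sending the same request to a different base URL. The standard-library Python sketch below builds (but does not send) an OpenAI-style chat completion request. The gateway base URL `https://gateway.example.com/v1`, the key strings, and the model name are hypothetical placeholders; LiteAPI's documentation is the authority on its real endpoint and model identifiers.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-format chat completion request; only base_url and api_key change per endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Before: the request goes directly to OpenAI.
direct = build_chat_request("https://api.openai.com/v1", "YOUR_OPENAI_KEY", "gpt-4o-mini", "Hello")
# After: the same request body, sent to a hypothetical gateway base URL.
gateway = build_chat_request("https://gateway.example.com/v1", "YOUR_GATEWAY_KEY", "gpt-4o-mini", "Hello")

print(gateway.full_url)
# urllib.request.urlopen(gateway) would send it; omitted here to keep the sketch offline.
```

The request body is identical in both cases, which is what makes the endpoint swap a configuration change rather than a code rewrite.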

LiteAPI follows strict data security protocols. All traffic is encrypted, user keys are stored with AES-256 encryption, and prompt data is neither logged nor stored. Provider training is also opt-out by default, so user data is not used for model improvement.

LiteAPI supports a wide range of model capabilities including text, vision, embeddings, and function calling.

LiteAPI helps users save costs by securing preferred contracts. Through these contracts, API credits can be acquired at discounted rates, leading to direct cost savings for users.

LiteAPI offers API credits at a discount by being an AI aggregation platform first. By securing preferred contracts and credits from cloud partners, model providers, and VCs, it is able to pass inference savings directly on to its customers.

The key difference between LiteAPI and other API interfaces like OpenRouter lies in their focus. Unlike OpenRouter, LiteAPI focuses solely on production-grade models from providers like OpenAI, Anthropic, and Google, providing an aggregation layer and variable discounts on inference.

Yes, LiteAPI is secure. Provider training is opt-out by default: LiteAPI sets flags so that user data is not used to improve OpenAI, Anthropic, or Google models. All traffic is encrypted with TLS 1.3, and user keys are stored with AES-256 encryption. LiteAPI never logs or stores prompt data, and user prompts and completions are not used or stored for training or analytics.

Yes, LiteAPI supports all major model capabilities including text, vision, embeddings, and function calling where available from the provider.

Yes, you can get custom pricing or enterprise plans with LiteAPI. Teams spending over $50,000/month on LLM usage can contact LiteAPI for custom discounts and dedicated support.

Using LiteAPI adds a small amount of latency compared to calling the providers directly: its edge routing typically adds less than 15 ms on top of the model latency. For most workloads, the cost savings outweigh this overhead.
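Whether a fixed routing overhead matters depends on how long the model call itself takes. A quick Python check of the relative overhead, using the typical figure of under 15 ms as an upper bound and hypothetical model latencies:

```python
def relative_overhead(model_latency_ms: float, gateway_overhead_ms: float = 15.0) -> float:
    """Fraction by which a fixed gateway overhead increases end-to-end latency."""
    return gateway_overhead_ms / model_latency_ms


# Hypothetical model latencies: a short embedding call up to a long chat completion.
for latency in (50.0, 500.0, 3000.0):
    print(f"{latency:>6.0f} ms model call -> +{relative_overhead(latency):.1%}")
```

The overhead is proportionally largest for short, latency-sensitive calls, so those are the workloads where measuring it directly is most worthwhile.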

Getting started with LiteAPI is straightforward. You simply need to redeem your $20 API credit, and then you're ready to begin.

LiteAPI provides enterprise-grade performance through its focus on scalability and reliability. It is tailored to large-scale businesses, and its edge deployment strategy is designed to maximize uptime. Automatic failover further reinforces its quality of service by keeping requests flowing when a system fails.

LiteAPI's multi-provider support simplifies connecting to various large language model (LLM) providers. Instead of managing separate APIs for each provider, a user can reach OpenAI, Anthropic, and Google LLMs through one unified gateway, which reduces complexity and speeds up project timelines.

Automatic failover plays a critical role in keeping LiteAPI available. If a primary system fails, traffic is switched automatically to a backup or redundant system, so service continues with minimal interruption.

LiteAPI facilitates team collaboration by allowing shared use of API credits. This feature empowers teams to experiment, test, and build applications collectively, using a common pool of resources. This enables efficient workload management and fosters a cooperative environment.
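LiteAPI does not describe how its shared credit balance is implemented. As a hypothetical illustration of the idea, a pool that several team members draw from needs an atomic check-and-debit so that concurrent requests cannot overspend it. The `CreditPool` class and `InsufficientCredit` exception below are assumptions made for this sketch, not part of any LiteAPI API.

```python
import threading
from collections import defaultdict


class InsufficientCredit(Exception):
    """Raised when a charge would exceed the shared balance (hypothetical)."""


class CreditPool:
    """A team-wide credit balance with per-member usage tracking (hypothetical sketch)."""

    def __init__(self, balance_cents: int):
        self._balance = balance_cents
        self._usage = defaultdict(int)  # member name -> cents spent
        self._lock = threading.Lock()

    def charge(self, member: str, cost_cents: int) -> int:
        """Atomically debit the shared balance and return what remains."""
        with self._lock:
            if cost_cents > self._balance:
                raise InsufficientCredit(f"{member} needs {cost_cents}, only {self._balance} left")
            self._balance -= cost_cents
            self._usage[member] += cost_cents
            return self._balance

    def usage(self) -> dict:
        """Snapshot of spend per member."""
        with self._lock:
            return dict(self._usage)


pool = CreditPool(balance_cents=2000)  # e.g. a $20 credit, tracked in integer cents
pool.charge("alice", 300)
pool.charge("bob", 450)
print(pool.usage())
```

Tracking integer cents rather than floating-point dollars keeps the balance exact, and holding the lock across the check and the debit prevents two members from spending the same credit.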

To use LiteAPI with code written for the OpenAI API, users only need to change their existing API endpoint. Because LiteAPI is compatible with the OpenAI API format, migration and integration are straightforward.

LiteAPI's usage analytics feature offers a way for users to monitor and track key metrics such as requests, tokens used, response times, and costs across all LLM providers. The insights generated help identify trends, measure performance, optimize resources, and make informed decisions, thus contributing positively to project management and execution.

LiteAPI maintains high-grade security by encrypting all traffic using Transport Layer Security (TLS) 1.3 and storing user keys with Advanced Encryption Standard (AES)-256 encryption. It further ensures data privacy by not logging or storing prompt data and prevents user data from being used for model improvement, thus demonstrating its commitment to user data integrity and confidentiality.

LiteAPI supports a wide range of model capabilities, including but not limited to text, vision, embeddings, and function calling. This flexibility suits many kinds of projects and makes LiteAPI applicable to a wide range of use cases.

LiteAPI secures preferred contracts and credits from cloud partners, model providers, and venture capitalists, then passes these savings directly on to its customers. These savings are a secondary benefit of using LiteAPI and can significantly lower users' costs.

LiteAPI leverages its status as an AI aggregation platform to secure preferred contracts and credits from cloud partners, model providers, and venture capitalists. These savings are passed on to customers, resulting in discounts of up to 40% on OpenAI, Anthropic, and Google LLMs.
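Because the discount is "up to" 40% and varies with the underlying contracts, it is worth estimating savings as a range rather than a single number. A small Python sketch with a hypothetical monthly spend and a hypothetical intermediate discount of 20%:

```python
def discounted_cost(list_cost: float, discount: float) -> float:
    """Cost after applying a fractional discount (0.40 means 40% off)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return list_cost * (1.0 - discount)


monthly_spend = 10_000.0  # hypothetical list-price spend in USD
for discount in (0.0, 0.20, 0.40):  # 0.40 is the advertised upper bound
    print(f"{discount:.0%} off -> ${discounted_cost(monthly_spend, discount):,.2f}")
```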

Switching to LiteAPI from a different API is made easy by its compatibility with the OpenAI API format. By changing the existing API endpoint, users can have a seamless transition and continue their activities without needing to rewrite their code.

LiteAPI can be used with any programming language or framework. Because it follows the OpenAI API format, any environment that can make HTTPS requests or use an OpenAI-compatible client can integrate with it.

Anyone can obtain an API key for LiteAPI by signing up on their website. The sign-up process even includes a $20 credit, allowing users to start integrating and testing the service immediately.

LiteAPI encrypts all traffic with Transport Layer Security (TLS) 1.3 to secure communication. User keys are stored with Advanced Encryption Standard (AES)-256 encryption, adding an extra layer of protection against unauthorized access.
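TLS versions are negotiated between client and server, so a client can also refuse anything older than TLS 1.3. As a client-side sketch only (it says nothing about how LiteAPI configures its servers), Python's standard `ssl` module can enforce this for outbound HTTPS calls; the `tls13_context` helper name is hypothetical.

```python
import ssl
import urllib.request


def tls13_context() -> ssl.SSLContext:
    """Verified SSL context that refuses protocol versions older than TLS 1.3."""
    ctx = ssl.create_default_context()  # certificate and hostname verification enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


ctx = tls13_context()
# An opener whose HTTPS connections use the TLS 1.3-only context.
opener = urllib.request.build_opener(urllib.request.HTTPSHandler(context=ctx))
# opener.open(request) would send a request; omitted to keep the sketch offline.
```

If the server cannot negotiate TLS 1.3, the handshake fails instead of silently downgrading to an older version.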

The unified API interface offered by LiteAPI simplifies the process of integrating with multiple LLM providers. It provides a consistent format for interacting with OpenAI, Anthropic, and Google LLMs, making the transition between providers seamless and reducing integration complexity.

Usage analytics provided by LiteAPI offer invaluable insights into requests, tokens used, response times, and costs across all connected providers. This data can empower you to optimize resource usage, improve performance, make informed decisions, and ultimately enhance the efficiency and effectiveness of your project.

LiteAPI offers a wide array of model capabilities, including text, vision, and embeddings. These capabilities make it adaptable to many kinds of applications and project requirements.

As an AI aggregation platform, LiteAPI is in a position to secure preferred contracts and credits from partners and pass those savings on to its customers. This can mean API credits at a discount for its users.

As an AI aggregation platform, LiteAPI can secure preferred contracts, and the terms of these contracts may vary over time as deals change. The resulting cost savings are passed directly to users, lowering their costs.

Integrating LiteAPI in an existing system involves changing your current API endpoint to LiteAPI's. The service's compatibility with different programming languages and frameworks, combined with its support for the OpenAI API format, allows for easy and seamless integration into pre-existing systems.

Pricing

Pricing model: Freemium

Paid options from: Free tier available

Billing frequency: Pay-as-you-go